Regulating AI: Businesses need to prepare for increasing risk of future disputes


With AI we face a combination of accelerating technological development and, depending on the jurisdiction, a greater or lesser degree of legislative intervention.

Artificial intelligence burst into our collective consciousness in November 2022 with OpenAI's release of ChatGPT.

Experts and insiders have known about the advances in AI for a while, but many were awestruck by the speed and scale of its take-up. This has left business leaders and policymakers experiencing a mixture of excitement and anxiety about the possibilities.

As AI becomes more advanced, autonomous and pervasive, it raises new disputes risks, especially if, amid the hype and in the rush to market, developers have moved too fast and broken things.

Regulation, regulation, regulation?

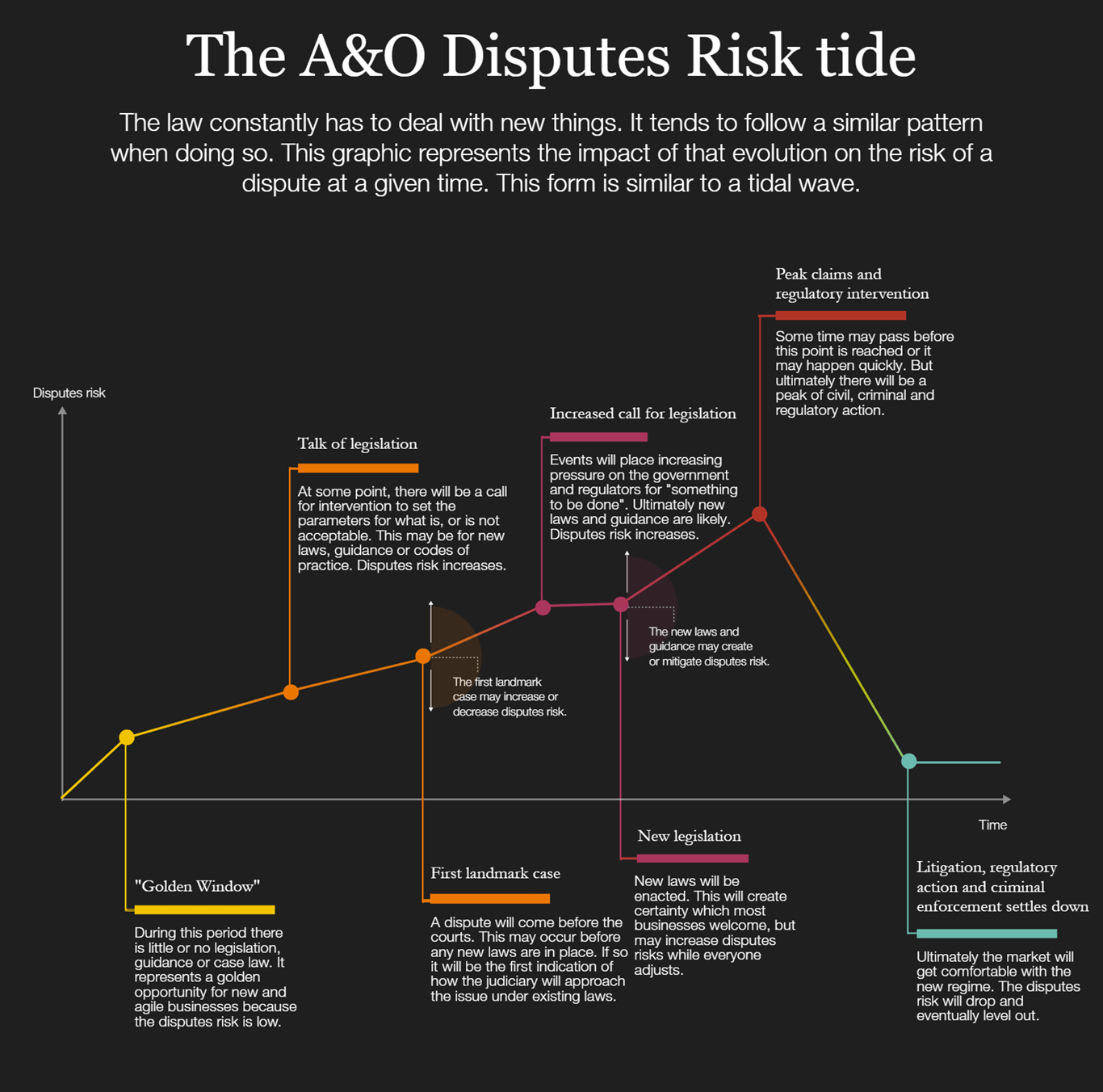

Allen & Overy has developed a model, which we have called the Allen & Overy Disputes Risk Tide, to illustrate the degree of exposure to disputes risks over time when something disruptive like AI butts against the law.

AI is currently exiting the “Golden Window” of opportunity. This is the period during which there is little or no AI-specific case law or regulation. This shift means that the legal risk has increased. At the same time, public consciousness of and concern about AI has increased. As the model shows, this typically results in a greater risk of disputes and a call for greater regulation.

Businesses that operate in highly regulated sectors, like finance, healthcare and transport, have to view AI deployment through the prism of their sector regulations. Those that operate in unregulated sectors, like information technology, currently do not. Instead, they must consider more generally applicable laws, such as those that protect data privacy and the intellectual property rights of others.

This lack of sector- or AI-specific regulation may have been part of the reason for the release of AI-powered language models like ChatGPT into the wild for generalised use. Contrast that with the development of autonomous vehicles operating on public highways, which has been very tightly controlled and is still not widely available. In this specific example, there is perhaps an implicit acknowledgment that, in the eyes of the public and policymakers, the risk of physical injury from a car crash remains a greater concern than the harder-to-pin-down risk of psychological injury from engaging with an AI chatbot.

The regulatory landscape is, however, changing. Although there is an increasing sense that some level of regulation is needed, different approaches and responses are being developed by policymakers around the world.

European Union

Buoyed, perhaps, by what it perceives as the success of GDPR, which, despite protestations from some, has effectively set the global standard for the regulation of personal data, the EU plans to do the same for the general regulation of AI. The European Commission's proposed AI Act, on which the Council presidency and the European Parliament have recently reached provisional agreement, is the most comprehensive approach any legislature has taken to the challenge of AI.

The draft AI Act focuses on the level of risk a given implementation of AI technology could pose to the health, safety or fundamental rights of a person. The proposal is to have four tiers: unacceptable, high, limited and minimal.

Where the risks are limited or minimal, there will be few requirements beyond transparency obligations, such as the requirement that a person interacting with an AI system be informed that they are doing so. If the risks are unacceptable (for example, social scoring and systematic real-time facial recognition), the system will be prohibited. High-risk AI systems are permitted but are subject to rigorous scrutiny and oversight.

In the context of generative AI, this approach is not without its challenges. For example, generative AI models could be seen as high or limited risk depending on the use to which they are intended to be put. The solution proposed by the European Parliament is to make specific provision for “foundation models”.

In the interim, the European Commission is also proposing a voluntary "AI Pact" for key actors to sign up to, pending the legislation coming into force. But even under existing legislation, the EU's approach has, for the first time, led some large tech companies not to offer their products in the region initially.

U.S. and UK

The U.S., with its "Blueprint for an AI Bill of Rights", and the UK, with its whitepaper, opted, initially at least, for a looser, principles-based approach, wanting to be more pragmatic and supportive of AI innovation. It remains to be seen whether this approach will last. As things stand, neither the U.S. nor the UK is planning to enact a single, comprehensive piece of AI-specific legislation of the kind envisaged in the EU.

The White House’s “Blueprint for an AI Bill of Rights” is a non-binding whitepaper containing five principles identifying the need for:

- safe and effective systems

- protection against algorithmic discrimination

- data privacy

- notice to be given that AI is being used and an explanation of how and why it contributes to outcomes that have an impact on users

- an ability to call for human intervention

The Biden Administration has also obtained voluntary commitments from leading AI companies to manage the risks posed by AI, and the U.S. Congress has a number of AI legislative proposals pending before it. More recently, President Biden issued an Executive Order setting the stage for U.S. federal oversight of the development and use of AI.

The UK whitepaper sets out the following principles:

- safety, security and robustness – AI should function in a secure, safe and robust way and risks should be carefully managed

- transparency and explainability – organisations should be able to communicate when and how AI is used and explain a system’s decision-making process

- fairness – use of AI should be compliant with UK laws (such as the Equality Act 2010 or UK GDPR) and must not discriminate against individuals, or create unfair commercial outcomes

- accountability and governance – organisations should put in place measures to ensure appropriate oversight of the use of AI with clear accountability for the outcomes

- contestability and redress – there must be clear routes to contest harmful outcomes and to seek compensation for them

The UK is not currently proposing a single AI regulator or new overarching legislation; rather, it intends to provide a framework to be implemented by multiple existing regulators. Although the stated motivation for this is to foster innovation, it also creates uncertainty for the businesses that will have to comply with it. Recent shifts in the UK government's stance may mean that legislation is to come.

China

The Cyberspace Administration of China’s (CAC) draft Measures on Managing Generative AI Services took perhaps the most prescriptive approach on this particular subset of AI, including extensive content and cybersecurity regulation alongside data protection. The more recent Interim Measures on Managing Generative AI Services appear more practical by comparison.

Fragmentation or unity?

The AI Safety Summit at Bletchley Park in the UK represents one attempt to reach more of a global consensus, in the vein of the work of the UN, the OECD and the G7.

Businesses will have different attitudes to disputes risks when it comes to AI. The disputes risks they may face around the world will differ in kind as well as in degree. In the EU, they will face new, all-embracing legislation. This should bring a degree of clarity, but it may also give rise to greater disputes risks. In the U.S. and the UK, for the moment, it seems that businesses will largely need to analyse new and fast-developing technology against the existing, non-AI-specific legal and regulatory framework. This approach brings its own challenges.

Ethical alignment

Related to regulation is the question of aligning an AI system with human goals, preferences or ethical principles.

The CAC measures, for example, require that content generated by AI “should reflect the core values of socialism” and should not “contain any content relating to the subversion of state power, the overthrowing of the socialist system, [or] incitement to split the country”.

In contrast, the draft EU AI Act seeks to protect the EU’s “values of respect for human dignity, freedom, equality, democracy and the rule of law and Union fundamental rights, including the right to non-discrimination, data protection and privacy and the rights of the child”.

In the short to medium term, the potential for tension between these two approaches is likely to reinforce market fragmentation as businesses seek to limit their exposure to disputes risks. Consequently, development of and advances in AI will not necessarily be uniform around the world.

Does AI have legal personality?

In the face of increasing regulation and the potential for civil liability, one question that is sometimes asked is whether the AI itself could be held accountable. In other words, does it have legal personality, that is, the ability to sue and be sued?

The law can accord legal personality to non-natural persons, as it has done in the case of companies. There is no technical reason why this cannot be done for AI. For example, in New Zealand, legislation declared a river, Te Awa Tupua (the Whanganui River), to have legal personality as part of a settlement with Māori.

But the question, in the case of AI, is: what would be the need? In 2017, the idea of giving legal personality to AI was floated unsuccessfully in the EU. Although the legal construct of a corporation serves a clear purpose, enabling the raising of capital and limiting liability, the same is not obviously true for AI. The question of whether AI should have legal personality could be analysed in many ways: through moral, political, utilitarian or legal-theoretical arguments. But, for the moment, there seems to be no call for it.

What we are seeing instead are debates about whether any existing legislation gives AI some of the rights and responsibilities associated with legal personality.

A good example is in relation to patents. So far, courts and patent offices around the world have, almost without exception, refused to allow AI to be the named inventor of a patent. We know this largely because of the efforts of self-proclaimed AI pioneer Stephen Thaler who has filed patent applications around the world in the name of DABUS, rather than in his own name. The courts have typically come to this conclusion, on fairly technical grounds, based on the interpretation of the relevant statutory provisions, rather than as a value judgement.

In the case of copyright, the UK is unusual in having a provision expressly dealing with computer-generated works. Under it, the author of a computer-generated literary, dramatic, musical or artistic work is the person by whom the arrangements necessary for the creation of the work are undertaken, not the AI itself.

Outside of specific instances like this, the idea of AI having legal personality, and the attendant disputes risks that may arise, seem limited in the short to medium term.

So, if not the AI, then who is liable? From an AI regulatory perspective in the EU, the proposed AI Act would provide an answer for the specific obligations imposed by that Act (whether on the provider, authorised representative, importer, user or notified body). In the UK and the U.S., the answer will turn on whether the activity is regulated and, if so, by whom. In the EU, civil liability will be dealt with expressly by legislation; in the UK and the U.S., it will again be a question of falling back on general principles. Either way, whether through express AI legislation or through litigation applying existing laws to new technology, there will be a move up towards the crest of the A&O Disputes Risk Tide.

Intellectual property and AI

Data is the lifeblood of AI. Many generative AI models will have been trained on data that is protected by copyright. A disputes risk arises if there is no clear basis for the use of copyright material. If your business is the licensing of content, you will jealously seek to protect the rights in that content. A live example is Getty Images, which is suing Stability AI in the UK and the U.S. for copyright infringement, pointing to the claimed reproduction of its watermark as evidence of the infringement. Different AI models pose different risks, but the legal tech sector is watching this and other similar litigation keenly.

There is also a difficult policy question as to how to calibrate copyright laws to foster AI innovation on the one hand and protect the creative industries on the other. Legislatures take very different approaches to fair use, fair dealing and text and data mining exceptions as defences to copyright infringement claims.

Data protection and AI

We have already seen regulatory action taken against AI in relation to the use of personal data, most notably the Italian data protection authority's temporary ban of ChatGPT. This was premised on a claimed lack of transparency, inadequate age verification and the lack of a clear lawful basis for the perceived "massive collection and processing of personal data to 'train' the algorithms on which the platform relies".

Other areas likely to give rise to disputes risks stemming from the use of personal data are bias and a lack of fairness. Fairness is a core part of the EU's GDPR as well as the draft EU AI Act, so AI models that use personal data in a way that may have unjustified adverse effects on individuals, or in a way that data subjects would not reasonably expect, are at risk of regulatory intervention.

Advertising – “mere puff” or misrepresentation?

We are seeing extraordinary claims about AI: what it can do, and by how much it can outperform humans. Occasionally, it is unclear whether true AI is being used at all. When, inevitably, the hype dies down, there will be questions about misleading advertising, mis-selling and, in some cases, dishonesty and fraud. We have seen the same thing with crypto-assets and, before that, with other innovative technologies. The legal remedies and the regulatory action often have a longstanding basis, since the underlying human acts are largely unchanged.

As a result, the exposure to disputes risks in this case sits outside the A&O Disputes Risk Tide model. The risk stems not from the technology or the degree of legal clarity, but from people behaving carelessly or dishonestly when promoting their products. Either way, we are likely to see commercial litigation, potentially including class actions, in the future.

AI and cybersecurity

AI systems, like any technology, can be vulnerable to hacking, spamming and cyberattacks. One line of defence is, ironically, to maintain a degree of human oversight and control over the systems and the data they generate.

In parallel, AI can also be used offensively by cyber-attackers to launch autonomous or coordinated attacks without human intervention, or to manipulate AI systems by feeding them fake or corrupted data for malicious purposes. These attacks can be hard to detect and counter.

The risk of AI-based cyberattacks is probably no greater than the cyber risk businesses already face all the time. However, the consequences of a breach, and the attendant exposure to enforcement action or litigation, are potentially of a different order from those of a breach involving more traditional technology.

Closing the Golden Window

With AI we are facing, simultaneously, rapidly accelerating technological development and, depending on the jurisdiction, a greater or lesser degree of actual or proposed legislative intervention.

Whichever way you cut it, this particular "Golden Window" of opportunity is closing. We are heading, inevitably, towards the crest of the A&O Disputes Risk Tide, and we are doing so at speed. In due course, we will see peak (and potentially divergent) claims and regulatory intervention before litigation, regulatory action and enforcement ultimately start to settle down. From a legislative perspective, to give some idea of the potential timescale, the GDPR has been in force for five years, and it was a long time in the making before that.

Those businesses already exposed to AI disputes risks need to recalibrate their understanding of that exposure as those risks start to rise. Those not currently widely exposed and seeking to understand the consequences of adopting AI need to appreciate that while this tide is perfectly navigable, the disputes risk profile is evolving rapidly.

When the law and its target – in this case AI – are changing equally quickly, businesses need to consistently and continuously recalibrate their risk exposure.

Updated in November and December 2023